The enterprise is entering uncharted territory. AI agents, autonomous systems that can browse the web, execute code, access databases, and interact with third-party services, are no longer experimental. They’re being deployed at scale. And they’re creating a security challenge that traditional identity and access management was never designed to handle.

The question isn’t whether AI agents will become part of your workforce. It’s whether you’re prepared to secure them when they do.

From Build 2025 through Ignite 2025, Microsoft has delivered the largest expansion of Entra capabilities to date, extending Zero Trust principles to AI workloads. This article examines Microsoft’s approach to securing the agentic workforce and provides a practical roadmap for security leaders navigating this new frontier.

The Agentic AI Security Problem

Traditional security models assume a human is behind every action. Authentication happens once, authorization is relatively static, and audit trails follow predictable patterns. AI agents break all of these assumptions.

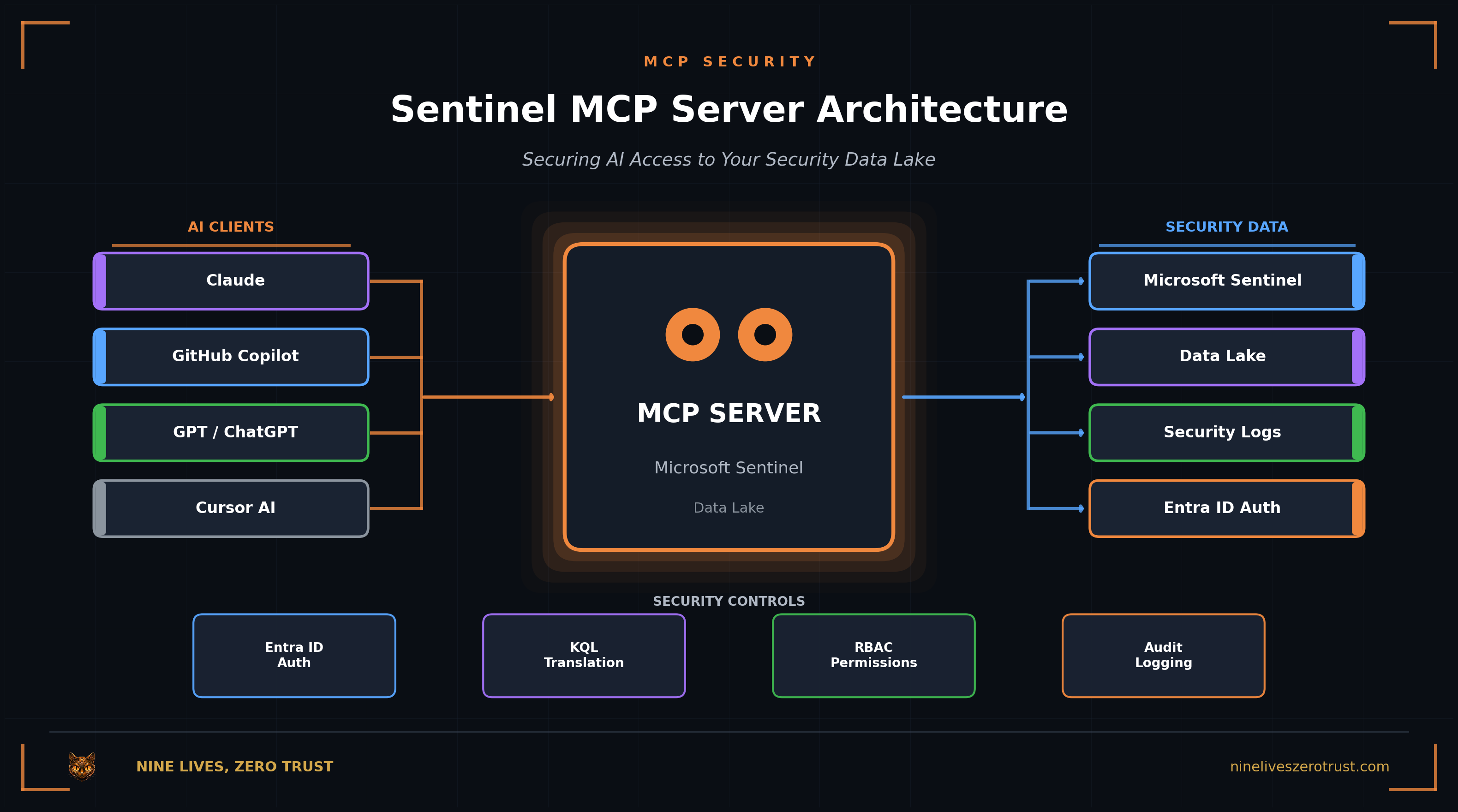

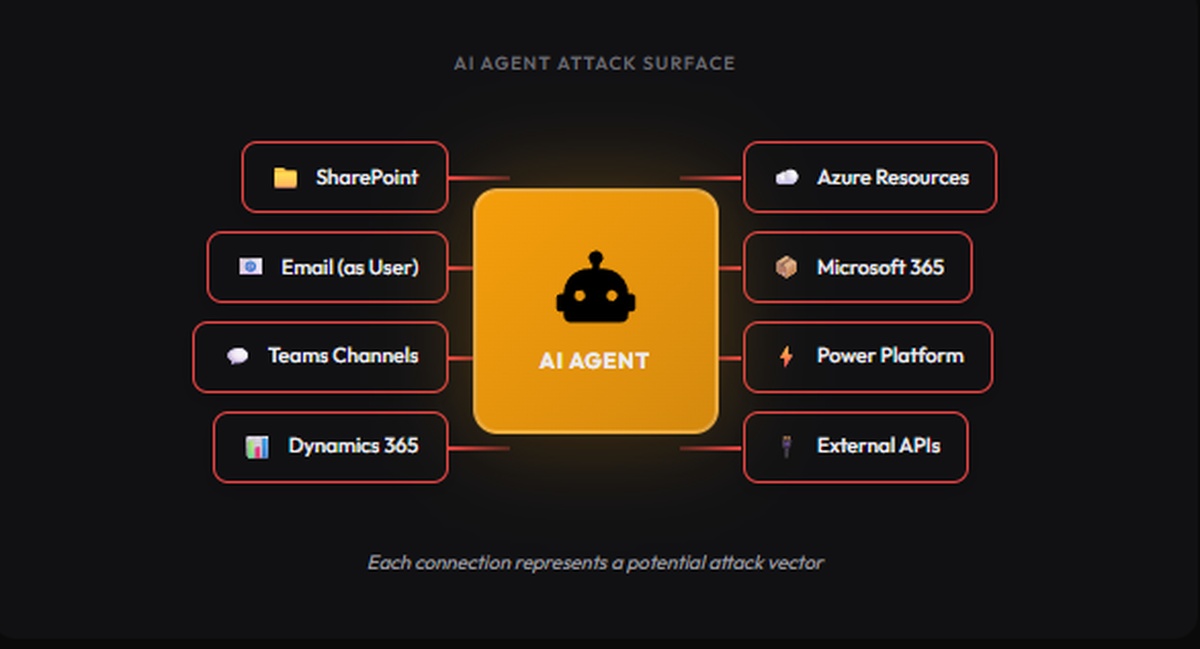

Consider what a modern AI agent can do: access SharePoint, send emails as users, post to Teams channels, query Dynamics 365, provision Azure resources, interact with Microsoft 365, trigger Power Platform workflows, and call external APIs.

Each of these capabilities represents an attack surface. An AI agent with overly broad permissions becomes a force multiplier for attackers. A compromised agent with access to your SharePoint, Dynamics, and Teams isn’t just a breach. It’s a catastrophe.

Before you can securely manage, protect, and govern this new type of identity, you need visibility. Then you need the right controls, because agent sprawl can quickly lead to excessive permissions, orphaned accounts, and increased risk.
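One way to make "excessive permissions" concrete is to compare what each agent has been granted against what its task needs. The sketch below is a minimal illustration, assuming you already have both sets from your own inventory. The agent names and permission strings are hypothetical and are not Entra or Microsoft Graph scope names.

```python
def excess_permissions(granted: set[str], required: set[str]) -> list[str]:
    """Return permissions an agent holds but does not need, sorted for stable output."""
    return sorted(granted - required)


# Hypothetical inventory: agent name -> (granted permissions, required permissions).
agents = {
    "hr-helper": ({"sharepoint.read"}, {"sharepoint.read"}),
    "sales-bot": ({"dynamics.read", "mail.send", "teams.post"}, {"dynamics.read"}),
}

report = {name: excess_permissions(g, r) for name, (g, r) in agents.items()}

# Keep only agents that hold more than they need.
over_privileged = {name: extra for name, extra in report.items() if extra}
print(over_privileged)
```

An empty result for an agent means its grants match its stated need. Anything left over is a candidate for removal before an attacker can make use of it.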

Microsoft’s Answer: Entra Agent ID

Microsoft first previewed Microsoft Entra Agent ID at Build 2025, then significantly expanded its capabilities at Ignite 2025. Microsoft positions it as an enterprise-grade identity and access management solution purpose-built for AI agents. Think of it like etching a unique VIN into every new car and registering it before it leaves the factory.

Unique Agent Identities

Every agent gets a unique identity in your Entra directory, just like employees

Complete Fleet Inventory

Discover and manage your entire agent fleet via a unified directory

Conditional Access

Same Zero Trust controls that protect human users now apply to agents

Lifecycle Management

Enforce policies from creation to decommissioning, preventing orphaned agents

When Entra Agent ID is enabled, agents built in Microsoft Copilot Studio and Azure AI Foundry automatically receive an Entra Agent ID. This gives companies better control over what each agent is allowed to do, reducing the risk of data leaks or unauthorized actions.

Agent 365: The Governance Platform

Ignite 2025 also introduced Microsoft Agent 365, a brand-new governance platform built to manage AI agents across an organization. While Entra Agent ID provides the identity foundation, Agent 365 serves as the control plane for discovery and observability. Agent 365 is initially available through Microsoft’s Frontier early access program.

Discovery

Find all agents in your environment, including shadow agents

Identity

Assign Entra Agent ID with unique credentials

Policy

Apply Conditional Access and governance rules

Monitor

Real-time security monitoring with Defender integration

This essentially treats non-human agents with the same identity rigor as employees. The Agent Registry maintains a complete inventory (including shadow agents), enforces lifecycle policies, and protects agent access to resources with Conditional Access.
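As a rough illustration of what a lifecycle policy might check, the sketch below flags agents that have no owner or have been idle too long. The record fields, the 90-day threshold, and the action names are assumptions made for this example. They do not reflect the Agent Registry's actual schema or policy engine.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class AgentIdentity:
    """Hypothetical registry record; field names are illustrative only."""
    name: str
    owner: Optional[str]
    last_used: datetime


def lifecycle_action(agent: AgentIdentity, now: datetime,
                     max_idle: timedelta = timedelta(days=90)) -> str:
    """Pick a lifecycle action: orphaned agents first, then idle ones."""
    if agent.owner is None:
        return "disable"   # nobody is accountable for this agent
    if now - agent.last_used > max_idle:
        return "review"    # owner should confirm it is still needed
    return "keep"


now = datetime(2025, 12, 1, tzinfo=timezone.utc)
fleet = [
    AgentIdentity("invoice-agent", "finance-team", now - timedelta(days=3)),
    AgentIdentity("pilot-agent", "it-team", now - timedelta(days=200)),
    AgentIdentity("demo-agent", None, now - timedelta(days=10)),
]
for agent in fleet:
    print(agent.name, "->", lifecycle_action(agent, now))
```

Checking ownership before idleness is a deliberate choice here: an orphaned agent is a risk even if it is still active.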

Conditional Access for Agent Identities

One of the most powerful announcements from Ignite 2025 is Conditional Access for Agent ID. This extends the same Zero Trust controls that already protect human users and apps to your AI agents.

✅ Allow

Trusted agent, approved context, low-risk action

⚠️ Escalate

Request verification or human approval (via workflow)

⚠️ Limit Access

Grant read-only or time-limited permissions

🚨 Block

Deny access, alert security team

Example: Agent Risk in Action

- Development agent tries to access production SharePoint → Blocked (attribute mismatch)

- High-risk agent detected by ID Protection → Blocked + alert

- Agent accessing sensitive data → Escalated to human approval

Conditional Access treats agents as first-class identities and evaluates their access requests the same way it evaluates requests for human users or workload identities, but with agent-specific logic. Every interaction is authenticated and authorized, following the principle of “never trust, always verify.”
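The four outcomes and the example scenarios can be sketched as a small decision function. This is a simplified model for illustration only, not how Conditional Access is implemented or configured. The `AccessRequest` fields, the risk labels, and the rule that medium risk maps to limited access are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"
    LIMIT = "limit"
    BLOCK = "block"


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request context; attribute names are illustrative."""
    agent_env: str        # environment the agent is tagged with, e.g. "dev"
    resource_env: str     # environment of the target resource, e.g. "prod"
    agent_risk: str       # "low", "medium", or "high"
    sensitive_data: bool  # whether the target holds sensitive data


def evaluate(req: AccessRequest) -> Decision:
    """Evaluate one request; the most restrictive matching rule wins."""
    if req.agent_risk == "high":
        return Decision.BLOCK       # high-risk agent: deny and alert
    if req.agent_env != req.resource_env:
        return Decision.BLOCK       # attribute mismatch, e.g. dev agent on prod
    if req.sensitive_data:
        return Decision.ESCALATE    # route to human approval
    if req.agent_risk == "medium":
        return Decision.LIMIT       # assumed rule: read-only or time-limited
    return Decision.ALLOW


print(evaluate(AccessRequest("dev", "prod", "low", False)))
print(evaluate(AccessRequest("prod", "prod", "high", False)))
print(evaluate(AccessRequest("prod", "prod", "low", True)))
```

Every request passes through `evaluate`, which mirrors the "never trust, always verify" principle: no request is allowed by default without first being checked against the blocking and escalation rules.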

Security Copilot Agents

Microsoft is expanding Security Copilot with 12 new Microsoft-built agents across Defender, Entra, Intune, and Purview, plus more than 30 partner-built agents. Here are highlights:

| Agent | Product | Function |
|---|---|---|
| Phishing Triage Agent | Defender | Triage and classify user-submitted phishing incidents |
| Threat Intelligence Briefing Agent | Defender | Curate threat intel based on your unique exposure |
| Conditional Access Optimization Agent | Entra | Find policy gaps and recommend quick fixes |
| Vulnerability Remediation Agent | Intune | Prioritize remediation with AI-driven risk assessments |
| Device Offboarding Agent | Intune | Identify stale devices and recommend offboarding |
| DLP/Insider Risk Alert Triage Agents | Purview | Prioritize highest-risk data security alerts |

These agents are purpose-built for security: they learn from feedback, adapt to workflows, and operate in line with Microsoft’s Zero Trust framework. Security Copilot is being rolled out to Microsoft 365 E5 customers.

Responsible AI Controls in Azure AI Foundry

For organizations building custom agents, Microsoft is putting responsible AI features in public preview within Azure AI Foundry:

Task Adherence

- Keep agents aligned with assigned tasks

- Prevent scope creep

- Detect off-task behavior

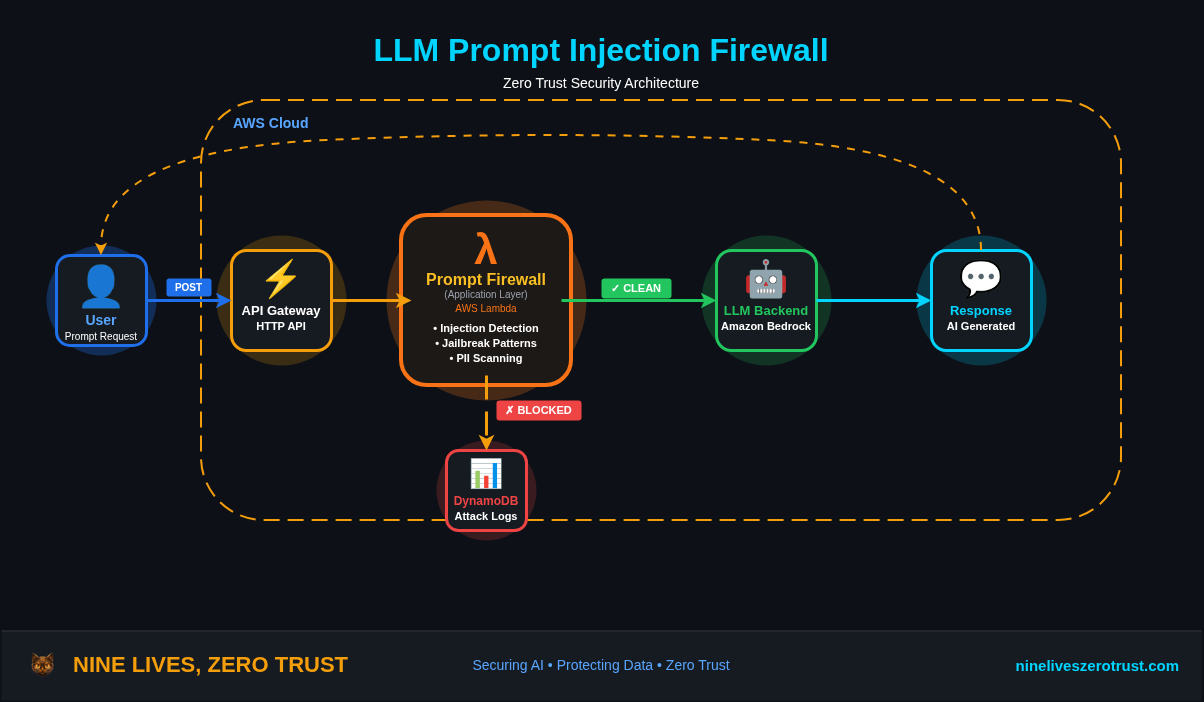

Prompt Shields

- Protect against prompt injection

- Spotlight risky behavior

- Jailbreak detection

PII Detection

- Identify sensitive data

- Manage data exposure

- Compliance enforcement

Purview Integration

- Data security controls

- Compliance policies

- Prevent oversharing

Microsoft Purview’s data security and compliance controls are now enabled natively for AI agents built within Azure AI Foundry and Copilot Studio. This means agents can inherently benefit from robust data security capabilities, reducing the risk of oversharing or leaking data.
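To show the general idea behind PII detection, here is a deliberately naive pattern-based sketch that finds and redacts two kinds of sensitive values before text leaves an agent. It is not the detector used by Azure AI Foundry or Purview, and real detection covers far more than two regular expressions. The labels and patterns are assumptions chosen for the example.

```python
import re

# Illustrative patterns only: a simple email shape and a US SSN shape.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with a [LABEL] placeholder and report which labels were found."""
    found = []
    for label, pattern in PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, found


clean, labels = redact("Contact jo@example.com, SSN 123-45-6789")
print(clean)
print(labels)
```

Returning the labels alongside the redacted text lets a caller log what was found without storing the sensitive value itself.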

Real-Time Security Monitoring

Copilot Studio security capabilities now include integration with trusted real-time monitoring solutions during agent runs. Admins can opt to run:

- Microsoft Defender for native threat detection and blocking of unsafe actions (where configured)

- Third-party security platforms for specialized monitoring

- Custom tools for organization-specific requirements

Basic audit logging for jailbreak and prompt injection events is generally available. Advanced real-time protection during agent runtime, including automatic blocking of suspicious behavior, is in public preview.
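The pluggable model described above (a native monitor, third-party platforms, or custom tools) can be pictured as a chain of checks that each proposed agent action passes through before it runs. The sketch below is a conceptual illustration, not the Copilot Studio or Defender integration interface. The action dictionary shape, the monitor functions, and the detection heuristics are all hypothetical.

```python
from typing import Callable, Optional

# A monitor inspects a proposed action and returns a reason to block it, or None.
Monitor = Callable[[dict], Optional[str]]


def injection_monitor(action: dict) -> Optional[str]:
    """Toy heuristic: flag one well-known prompt injection phrase."""
    if "ignore previous instructions" in action.get("input", "").lower():
        return "possible prompt injection"
    return None


def external_call_monitor(action: dict) -> Optional[str]:
    """Toy organization-specific rule: external calls must use HTTPS."""
    if action.get("tool") == "http" and not action.get("url", "").startswith("https://"):
        return "non-HTTPS external call"
    return None


def run_guarded(action: dict, monitors: list[Monitor], audit_log: list[dict]) -> bool:
    """Return True if the action may proceed; log every decision either way."""
    for monitor in monitors:
        reason = monitor(action)
        if reason:
            audit_log.append({"tool": action.get("tool"), "blocked": True, "reason": reason})
            return False
    audit_log.append({"tool": action.get("tool"), "blocked": False, "reason": None})
    return True


log: list[dict] = []
checks = [injection_monitor, external_call_monitor]
print(run_guarded({"tool": "search", "input": "quarterly revenue"}, checks, log))
print(run_guarded({"tool": "http", "url": "http://example.com", "input": ""}, checks, log))
print(log)
```

Logging allowed actions as well as blocked ones keeps the audit trail complete, which matters when you later need to reconstruct what an agent did.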

Enterprise Partnerships

Microsoft isn’t going it alone. Strategic partnerships extend Entra Agent ID across the enterprise ecosystem.

These partnerships mean that AI agents operating across your ServiceNow workflows or Workday processes can be governed with the same identity controls as agents built natively in Microsoft’s ecosystem.

Implementation Roadmap

Discovery

- Deploy Agent 365

- Inventory all agents

- Identify shadow agents

- Assess current permissions

Identity Foundation

- Enable Entra Agent ID

- Assign identities to agents

- Configure Agent Registry

- Define lifecycle policies

Access Controls

- Deploy Conditional Access

- Configure risk-based policies

- Enable Purview integration

- Set up monitoring

Operations

- Integrate with Defender

- Deploy Security Copilot agents

- Enable continuous evaluation

- Establish governance reviews

The Security Gap Is Your Opportunity

The Visibility Gap

Most organizations don't know how many agents are in their environment. Those that establish visibility and governance now will move faster as agentic AI matures. The agentic era isn't coming. It's here.

Conclusion

AI agents are the new workforce, and they need Zero Trust too. Microsoft has recognized this reality and delivered a comprehensive framework for securing autonomous AI systems.

Microsoft Entra Agent ID provides first-class identity management for AI agents, treating them with the same rigor as human employees. Agent 365 delivers the governance platform for discovery and control. Conditional Access for Agents extends proven Zero Trust controls to this new identity type.

For organizations already invested in the Microsoft ecosystem, the path forward is clear: start with discovery, build the identity foundation, and layer on the controls. The key is starting now, while this security gap still represents an opportunity rather than a liability.

References

Microsoft Learn. “Microsoft Entra Ignite 2025: Key Announcements and Updates.” November 2025.

Microsoft Security Blog. “Microsoft extends Zero Trust to secure the agentic workforce.” May 2025.

Microsoft Learn. “Conditional Access for Agent Identities in Microsoft Entra.”

Microsoft Learn. “Microsoft Entra Agent ID documentation.”

Microsoft Security Blog. “Microsoft unveils Microsoft Security Copilot agents and new protections for AI.” March 2025.

Microsoft Tech Community. “Microsoft Entra: What’s New in Secure Access on the AI Frontier.”

Microsoft Official Blog. “Beware of double agents: How AI can fortify or fracture your cybersecurity.” November 2025.

Microsoft Tech Community. “Zero-Trust Agents: Adding Identity and Access to Multi-Agent Workflows.”

Microsoft 365 Blog. “Microsoft Agent 365: The control plane for AI agents.” November 2025.

IDC Info Snapshot (sponsored by Microsoft). “1.3 Billion AI Agents by 2028.” #US53361825, May 2025.

Microsoft Ignite 2025. “Book of News.”

Jerrad Dahlager, CISSP, CCSP

Cloud Security Architect · Adjunct Instructor

Marine Corps veteran and firm believer that the best security survives contact with reality.
